Reflection: Hyperlinked Environments

For this Reflection Post I thought it a good idea to lean into an issue that I am struggling with: the seemingly apodictic rise of LLM-based “AI” services and tools. We are seeing this in the news, and San Jose State itself sits in the middle of Silicon Valley and has embraced the technology in many ways. See the school’s Vision statement here.

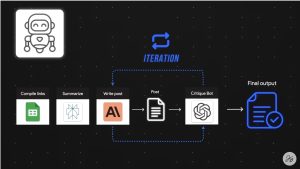

There are many discussions of the technology and of the nuances among its layers: large language model systems, the applications built on top of them, the AI workflows (the scripted behaviors we can task these systems to do), and of course the “application” or “bundle” of these tasks that becomes an AI “agent”. The video linked in the course module is very clear in walking us through the differences and the state of things. I’m going to return to it as an example of one of my concerns in a moment.

And of course we have “generative AI” like Midjourney, as well as audio and video creation tools that are known as “deepfake” generators. In the creative technology space (video games, digital video and audio editing) the use of “AI” has long been a part of the workflow and labor-scape. Machine Learning (ML) is a technique that powers video game NPC behavior and enables plugins that do “miraculous things” like automatic noise removal from audio recordings. However, that wasn’t confused with true creativity until new “generative” AI systems emerged on the scene.

The Motion Picture Sound Editors professional organization has banned any submission for awards consideration that was built with generative AI. Their position is that if it is an effect, sound, or ambiance that you could have created yourself, but instead asked an automated system to make for you, then you are not the creator, and therefore cannot win an award for it. They reward the human in their industry. Read their press release here.

Shell Game Season 2 is an experiment? Performance art? Product? Content? It is by journalist Evan Ratliff, and whatever you want to call it, it has an almost Netflix-series-worthy plot in which a journalist documents creating an entire company using Chatbot “Agents”. The company’s business/product? Chatbot Agents. It is surreal.

And it highlights very clearly my current concerns about LLMs, the increasing sophistication of workflows, and their embodiment in “agents”. If we look at a couple of screenshots from the linked video, we can see where the example workflow shows the host asking the LLM to do things – find and read articles…

Then write the post…

Then post the posts…

Nowhere did the host describe reading the articles themselves to, oh I don’t know, make sure the summary is good? They mention reading the summarized post to make sure it’s funny enough… Certainly there is a lot of human intervention in the “workflow” stage they describe, but the goal is to get the system to do something automatically, easily, with no real human intervention. My problem, currently, is that the thing they are automating is reasoning and synthesis (read these articles, summarize and synthesize, then post in their name), which is supposed to make the reader feel / trust / approve of the host, when in fact they didn’t do that work. A friend of mine framed it in terms of an email request: if you couldn’t be bothered to write an email requesting a meeting, I won’t waste my time meeting with you.

I know that this is a singular example, one where these systems are being positioned in the marketing realm as tools that make it easier for influencers to influence, and that it is not representative of the myriad domains in which AI systems are affecting our world, especially our LIS world.

In a recent conference talk, Sol Werthen, an MLIS student at UCLA, addressed an aspect of this. They advocated for the human interaction in the reference interview process, contrasting it with the sycophantic interactions that ChatGPT offers when students engage with it to try to find research help. I wrote about the conference here, and I hope to update it with more information after the slides are shared. In listening to working librarians and engaged students at the conference, I did develop some optimism regarding the human-centered and ethical use of these tools.

Edit:

From my Digital Asset Management class readings, we’re seeing a lot of related spaces where AI is intersecting with LIS domains and knowledge work:

The Act That Changes Everything: Why DAMs Are Now Liable for Metadata

Metadata in the Age of AI: From Creation to Curation

Effective Risk Management strategies when using AI for DAM

The Hard Truth About Human-Like AI Conversations